Learn how to install an SSL certificate on an Apache web server running on a Linux machine. The steps cover installation, configuration, and verification.

Before we start with the SSL certificate steps, let's run through the prerequisites below:

- You have an Apache webserver running on your Linux machine.

- You have generated a CSR file and submitted it to the certificate vendor. Read here: steps to generate CSR.

- You have received an SSL certificate file from the vendor.

The SSL certificate you received from the certificate vendor should be a filename.crt file. It can be opened with a text editor and looks like this:

-----BEGIN CERTIFICATE-----

OVowgZYxCzAJBgNVBAYTAk1ZMREwDwYDVQQIDAhTZWxhbmdv

cjEWMBQGA1UEBwwNUGV0YWxpbmcgSmF5YTEdMBsGA1UECgwUTEFGQVJHRSBBU0lB

IFNkbiBCaGQxFTATBgNVBAsMDExhZmFyZ2UgQXNpYTEmMCQGA1UEAwwdY3VzdG9t

ZXJwb3J0YWwubGFmYXJnZS5jb20ubXkwggEiMA0GCSqGSIb3DQEBAQUAA4IBDwAw

ggEKAoIBAQC5MP8NYcSJugZWWcRSKvtFFXaHNDHl9zTocAfKfxmFJyoHATRXPu1A

dRJKE3sKYxW+uEMdfsdpGKaXOv8y+72PEay/V/s3Wiyyv1SEpU1CqPbVkjTRBdmx

A7Xso9tkrBQUIf6ICn+HBZesJ+l2WOWs1xNL/XLx7MEaDKGnhnnxyCF1U7R6J8Bh

QGMHQzdDyXWjIRxyQIJ2VmFB7eJ0OJZUXpWZXTxyZjjQZr22Tr+xN+gu9LjavPxO

lVyDqXJG+V1ouFfk5zG6hXFnQeYzCAGVTpCRss/JW1fBCyTzJj+SEqPDzYj8hwww

RSJlFuGVYmybNW1SCUFxXRoDFjRh04yxAgMBAAGjggJ5MIICdTAoBgNVHREEITAf

gh1jdXN0b21lcnBvcnRhbC5sYWZhcmdlLmNvbS5teTAJBgNVHRMEAjAAMA4GA1Ud

DwEB/wQEAwIFoDBhBgNVHSAEWjBYMFYGBmeBDAECAjBMMCMGCCsGAQUFBwIBFhdo

dHRwczovL2Quc3ltY2IuY29tL2NwczAlBggrBgEFBQcCAjAZDBdodHRwczovL2Qu

c3ltY2IuY29tL3JwYTArBgNVHR8EJDAiMCCgHqAchhpodHRwOi8vc3Muc3ltY2Iu

Y29tL3NzLmNybDAdBgNVHSUEFjAUBggrBgEFBQcDAQYIKwYBBQUHAwIwHwYDVR0j

BBgwFoAUX2DPYZBV34RDFIpgKrL1evRDGO8wVwYIKwYBBQUHAQEESzBJMB8GCCsG

AQUFBzABhhNodHRwOi8vc3Muc3ltY2QuY29tMCYGCCsGAQUFBzAChhpodHRwOi8v

c3Muc3ltY2IuY29tL3NzLmNydDCCAQMGCisGAQQB1nkCBAIEgfQEgfEA7wB2AN3r

HSt6DU+mIIuBrYFocH4ujp0B1VyIjT0RxM227L7MAAABVirLn4IAAAQDAEcwRQIg

MiUcoABrkMnSdbc9U4zKUvKijKOsocUbhfeAAbCYFUYCIQCOfhqeLIgADRcxOW+h

HEazFjqFdwIAluchDlXLss3jvFxHpI0Tyg00NIR7kJivaP3

scDCpInpcg/xKTzM8aewc1cmkDM8hm9j2VZ0yQgcc+rd8ZHQibb0M4WAPDel/tFO

5YodvCGJtkLIItei20qtkqZ4fMuW5A

-----END CERTIFICATE-----
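A quick way to confirm that the file you received has this shape is to look for the two marker lines. The sketch below assumes a POSIX shell; the function name `is_pem_certificate` and the example path are illustrative and not part of any vendor tooling.

```shell
# Succeeds only when the file contains both PEM certificate markers.
# This checks the file's shape, not that the certificate itself is valid.
is_pem_certificate() {
    file=$1
    grep -q -e '-----BEGIN CERTIFICATE-----' "$file" &&
        grep -q -e '-----END CERTIFICATE-----' "$file"
}

# Usage (hypothetical path). If openssl is installed, it can also decode
# the certificate and print who it was issued to and when it expires:
# cert=/etc/httpd/ssl_certs/filename.crt
# is_pem_certificate "$cert" && openssl x509 -in "$cert" -noout -subject -issuer -dates
```

If the markers are missing, the vendor may have sent the certificate in a different encoding, and it would need converting before Apache can use it with the directives shown later.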

Installation :

Using sftp, scp, FTP, or a similar tool, copy the SSL certificate, the intermediate certificate file (if any), and the private key file (generated during the CSR generation step above) to the Linux machine running the Apache web server. Prefer an encrypted transfer such as sftp or scp for the private key. It is advisable to keep these files inside the Apache installation directory, in separate subdirectories if you want to maintain an archive of old files. For example, if the Apache installation directory is /etc/httpd, you can create /etc/httpd/ssl_certs and keep new and old certificates in it. Likewise, you can create /etc/httpd/ssl_keys for new and old key files.

Normally, the certificate and key files should be readable by the owner and by the group that the Apache user belongs to. The private key in particular should not be readable by other users.
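The directory layout and permissions described above could be set up roughly as follows. This is a minimal sketch: the function name `install_ssl_files` is made up for illustration, and mode 640 is an assumption that matches "readable by owner and group". The right owner and group depend on how your Apache runs.

```shell
# Copy a certificate and a private key into ssl_certs/ and ssl_keys/
# under the given Apache directory, then restrict access to owner and group.
install_ssl_files() {
    apache_root=$1 cert=$2 key=$3
    mkdir -p "$apache_root/ssl_certs" "$apache_root/ssl_keys" || return 1
    # Note: cp overwrites a file of the same name, so rename old files
    # first if you want to keep them as an archive.
    cp "$cert" "$apache_root/ssl_certs/" || return 1
    cp "$key" "$apache_root/ssl_keys/" || return 1
    chmod 640 "$apache_root/ssl_certs/$(basename "$cert")" \
              "$apache_root/ssl_keys/$(basename "$key")"
}

# Usage (run as root; paths are the examples from this article):
# install_ssl_files /etc/httpd /tmp/filename.crt /tmp/private.key
# An intermediate certificate can be copied to ssl_certs/ the same way.
```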

Configuration :

Log in to your Linux machine and navigate to the Apache installation directory where the configuration file resides. Most of the time, Apache is installed in the /etc/httpd/ directory. If you are not sure where Apache is installed, identify the appropriate Apache instance in the ps -ef output (in case multiple Apache instances are running on the same machine). To check the Apache configuration file location, use the command below:

# /usr/sbin/httpd -V

Server version: Apache/2.2.17 (Unix)

Server built: Oct 19 2010 16:27:47

Server's Module Magic Number: 20051115:25

Server loaded: APR 1.3.12, APR-Util 1.3.9

Compiled using: APR 1.3.12, APR-Util 1.3.9

Architecture: 64-bit

Server MPM: Prefork

threaded: no

forked: yes (variable process count)

Server compiled with....

-D APACHE_MPM_DIR="server/mpm/prefork"

-D APR_HAS_SENDFILE

-D APR_HAS_MMAP

-D APR_HAVE_IPV6 (IPv4-mapped addresses enabled)

-D APR_USE_SYSVSEM_SERIALIZE

-D APR_USE_PTHREAD_SERIALIZE

-D SINGLE_LISTEN_UNSERIALIZED_ACCEPT

-D APR_HAS_OTHER_CHILD

-D AP_HAVE_RELIABLE_PIPED_LOGS

-D DYNAMIC_MODULE_LIMIT=128

-D HTTPD_ROOT="/etc/httpd"

-D SUEXEC_BIN="/usr/sbin/suexec"

-D DEFAULT_PIDLOG="logs/httpd.pid"

-D DEFAULT_SCOREBOARD="logs/apache_runtime_status"

-D DEFAULT_LOCKFILE="logs/accept.lock"

-D DEFAULT_ERRORLOG="logs/error_log"

-D AP_TYPES_CONFIG_FILE="conf/mime.types"

-D SERVER_CONFIG_FILE="conf/httpd.conf"

The last line of the output above shows the configuration file (i.e. httpd.conf) location. This is a relative path. To get the absolute path, combine it with the HTTPD_ROOT value from the same output. The complete path of the config file is HTTPD_ROOT/SERVER_CONFIG_FILE, i.e. /etc/httpd/conf/httpd.conf in this case.
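Joining the two values can also be scripted. The sketch below reads `httpd -V` output on standard input and prints the absolute config path. The function name `config_path_from_httpd_v` is hypothetical, and the parsing assumes the quoted `-D NAME="value"` format shown above.

```shell
# Read `httpd -V` output on stdin and print the absolute config file path.
config_path_from_httpd_v() {
    awk -F'"' '
        /HTTPD_ROOT=/         { root = $2 }
        /SERVER_CONFIG_FILE=/ { conf = $2 }
        END {
            if (conf ~ /^\//) print conf            # already an absolute path
            else              print root "/" conf   # relative to HTTPD_ROOT
        }'
}

# Usage, assuming the binary is at /usr/sbin/httpd as in this article:
# /usr/sbin/httpd -V | config_path_from_httpd_v
```

With the sample output above, this would print /etc/httpd/conf/httpd.conf.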

Once you have located the configuration file, edit it with a text editor such as vi and specify the SSL certificate paths. You need to define the three paths below. If the parameters are already present in the file, just update their paths.

SSLCertificateFile /<path to SSL cert>/filename.crt

SSLCertificateKeyFile /<path to private key>/private.key

SSLCertificateChainFile /<path to intermediate cert>/intermediate.crt

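These are the paths where you copied the SSL certificate, intermediate certificate, and private key in the step above. Save the file and double-check your changes. Note that on newer Apache releases, SSLCertificateChainFile became obsolete with version 2.4.8, when SSLCertificateFile was extended to also load intermediate CA certificates from the server certificate file.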
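Before restarting, it can help to confirm that every path you entered points to a readable file. The sketch below is one way to do that. The function name `check_ssl_paths` is made up, and it makes simplifying assumptions: directive names are spelled exactly as above, paths are absolute and unquoted, and only the first occurrence of each directive is checked.

```shell
# Report whether the files named by the three SSL directives are readable.
# Returns non-zero if any configured path cannot be read.
check_ssl_paths() {
    conf=$1
    status=0
    for directive in SSLCertificateFile SSLCertificateKeyFile SSLCertificateChainFile; do
        # Commented-out lines are skipped because their first field starts with '#'.
        path=$(awk -v d="$directive" '$1 == d { print $2; exit }' "$conf")
        if [ -z "$path" ]; then
            echo "$directive: not set"
        elif [ -r "$path" ]; then
            echo "$directive: OK ($path)"
        else
            echo "$directive: cannot read $path"
            status=1
        fi
    done
    return $status
}

# Usage (config path from this article). Apache's own syntax check can
# follow, using the -t option of httpd:
# check_ssl_paths /etc/httpd/conf/httpd.conf && /usr/sbin/httpd -t -f /etc/httpd/conf/httpd.conf
```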

Final step :

You are done with the configuration now, but the running Apache instance has not picked up these changes yet. You need to restart the Apache instance for the new settings to take effect. You can restart Apache with the commands below:

# /usr/sbin/apachectl -f /<path of conf file>/httpd.conf -k stop

# /usr/sbin/apachectl -f /<path of conf file>/httpd.conf -k start

Verify that Apache is up and running using the ps -ef command. If you don't see the Apache instance running, check the error log (error_log) for troubleshooting. This log file is located in the logs directory under the Apache installation directory. The path can be identified from the DEFAULT_ERRORLOG value in the httpd -V output above.
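The check described above can be sketched as two small helpers. The function names are illustrative, and the log path in the usage line assumes HTTPD_ROOT is /etc/httpd and DEFAULT_ERRORLOG is logs/error_log, as in the sample output.

```shell
# List running httpd processes. The [h] keeps grep from matching itself.
apache_processes() {
    ps -ef | grep '[h]ttpd'
}

# Print the last N lines of an error log (default 20).
recent_errors() {
    log=$1
    count=${2:-20}
    if [ ! -r "$log" ]; then
        echo "cannot read $log" >&2
        return 1
    fi
    tail -n "$count" "$log"
}

# Usage: show the tail of the log only when no httpd process is found.
# apache_processes || recent_errors /etc/httpd/logs/error_log
```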

Verification :

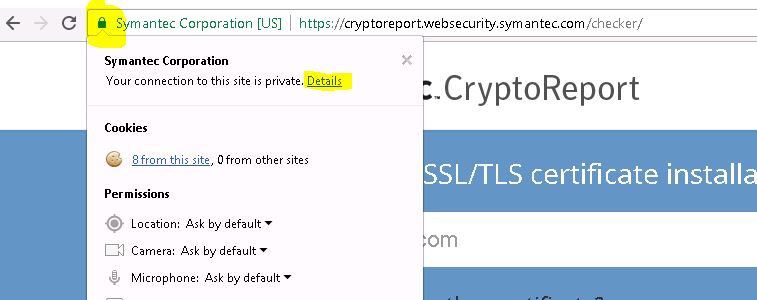

Once Apache is up and running with the new configuration, verify that the certificate is installed correctly by visiting this free online tool by Symantec.

You can also visit the website or link served by Apache in a fresh browser session and check the certificate details by clicking the lock icon in the browser's address bar, then clicking Details in the dropdown that appears.

You will be presented with a certificate summary screen. Click View Certificate to see the certificate details.

This shows the certificate details, including purpose, issue date, expiry date, organization, and issuer.
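The same details can be read from the command line if openssl is available. This is a sketch rather than a replacement for the browser check. The function names are made up, and www.example.com is a placeholder for your own hostname.

```shell
# Print subject, issuer, and validity dates of the first PEM certificate on stdin.
print_cert_details() {
    openssl x509 -noout -subject -issuer -dates
}

# Connect to a TLS port (default 443) and dump what the server presents.
fetch_server_cert() {
    host=$1
    port=${2:-443}
    openssl s_client -connect "$host:$port" -servername "$host" </dev/null 2>/dev/null
}

# Usage with a placeholder hostname:
# fetch_server_cert www.example.com | print_cert_details
# Or inspect the file from the vendor before installing it:
# print_cert_details < /etc/httpd/ssl_certs/filename.crt
```

The notBefore and notAfter lines in the output correspond to the issue and expiry dates shown in the browser.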